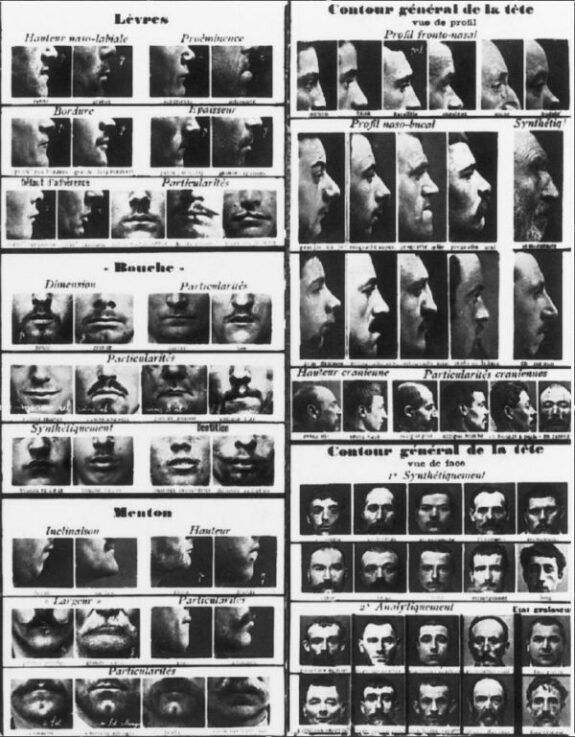

ImageNet comprises 21,841 categories, many of them innocuous. Fixed categories, however, cannot capture the subtle nuances and multiple connotations that a noun carries. As a result, the range of meaning in ImageNet's categories is flattened, and valuable information that lies between the defined labels is lost. ImageNet originally contained a staggering 2,832 subcategories under "person," spanning attributes such as age, race, nationality, profession, socioeconomic status, behaviour, character, and even morality. Even seemingly benign classifications such as "basketball player" or "professor" carry assumptions and stereotypes about race, gender, and ability. Neutral categories therefore remain elusive: the training datasets used in AI inevitably encode a particular worldview, which in turn shapes the generated output. As the researcher Kate Crawford points out: “While this approach [of ImageNet] has the aesthetics of objectivity, it is nonetheless a profoundly ideological exercise.”